Companies using advanced AI technology in their recruitment efforts are already striving to make the hiring process more transparent, but legislators are beginning to make those efforts mandatory. Regulations passed in September by the California State Legislature are the most recent example of legislation catching up to advancements in technology. At its most basic, the law, which takes effect in July 2019, will require companies that use automated systems like chatbots to disclose when their customers are interacting with the technology as opposed to a human.

Like many efforts that place stipulations on technology, this new set of regulations has broader implications than the specific problem it targets. The intent, as the co-authors have stated, is to clamp down on bots that are used for deceptive commercial or political practices. However, the law’s disclosure requirement encompasses far more than just these intended targets. Any company using bots or AI-powered systems to interact with customers will now need to offer a disclosure in some form or another.

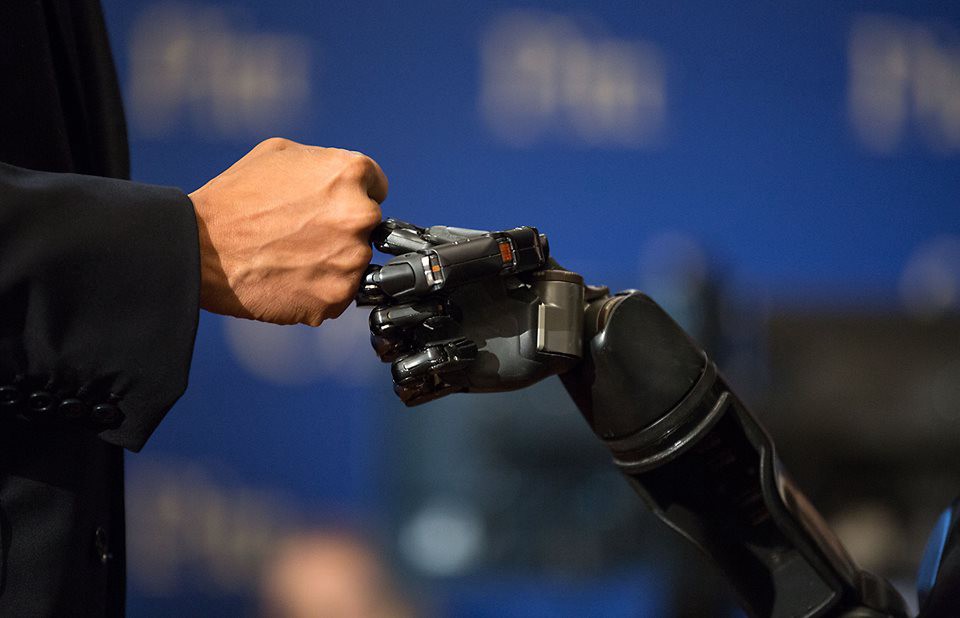

The change is, in a way, ironic. The promise of AI technology for years has been that humans could interact with systems so smart that it is nearly impossible to tell whether they are speaking with a robot. In fact, the Turing Test, the longtime standard for determining true AI, is based upon this blurring of human and machine. Companies that use the technology to interact with candidates were likely attracted to it in the first place because of its ability to provide a human touch in an ultimately automated process.

However, California’s new rules are not likely to be the last. Companies considering adopting chatbots in their recruiting efforts — or those that already have the technology in place — need to begin thoughtful conversations about how they can comply with this and similar legislation in the future. While a more transparent disclosure of candidate interaction with bots is not inherently a bad thing — companies should always strive for more transparency in recruiting — it will require some changes to how the technology is deployed and maintained.

Companies whose recruiting efforts include bots that interact with candidates and employees — or those that are considering the tech in the near future — should consider the following:

Make sure the technology is agile

New laws and regulations often require companies to make significant changes to their processes. In the wake of California’s new law, companies that use chatbots or virtual assistants will need to be fully transparent with candidates. This is why it is essential that the software and systems that power the chatbots are easy to update. If the tech is not agile, or if the partner that provides it is not proactive, a company can find itself out of compliance and potentially facing more problems than the bots solve in the first place.

The importance of being able to make changes to the AI-powered systems that enable chatbots also goes beyond just compliance. Candidate preferences and needs change, and so too should the virtual assistants that shepherd them through the application process. Not being able to respond to the shifting desires of the candidate can set recruitment efforts behind, a bad place to be in an increasingly candidate-driven market.

Keep ahead of legislative changes

Smart companies should be keeping an eye on legislative actions that may affect the way they use AI in the recruiting process. Yes, the new California law is limited to one state, for now. But as legislation increasingly catches up with the technology, businesses can expect more rules to follow.

GDPR is just one example of how being behind can cause chaos. That regulation had far too many companies scrambling at the last minute to become compliant. When it comes to AI, companies should be proactive about how legislation will affect their products now and in the future.

For HR, this means thinking critically about how each step of the recruiting process can be more transparent. Do candidates know how much personal data is being stored? If the candidate participates in a video interview, is the recording kept, is it being replayed, and for whom? Having concrete answers to these questions is critical to staying ahead and being able to comply with any future regulations or laws that may affect a company’s use of technology.

Ensure the candidate experience is not forgotten

A positive candidate experience should be a top goal for employers, and their use of AI should support this. Companies need to keep in mind that candidate comfort with AI and chatbots will vary from industry to industry. Candidates for engineering jobs, for example, may be far more comfortable interacting with a bot than candidates for marketing roles.

This means taking care in how interactions with the technology are disclosed. If candidates in your industry typically have no issues communicating with bots, a quick, simple disclosure akin to “Hello! I’m an automated assistant” may suffice. However, if candidates are more averse to the technology, it may be advantageous to offer an option that includes human interaction, and provide further explanation on how the technology works and what data is being disclosed.

Use transparency to your advantage

As transparency about AI becomes increasingly required, companies should use this opportunity to explain the technology to their advantage. Candidates should not only be told when they are interacting with AI, but they should also be educated about how the technology may be advantageous to their job search.

This goes beyond disclosures about bots. AI is increasingly playing a role in other aspects of the job search, and companies should take the opportunity to tell candidates how this may help them. If an algorithm is being used to eliminate bias in the screening process, companies should find ways to proactively inform candidates. If they are using AI tech that can intelligently update candidates who are not selected on future opportunities with the company, they should tout this capability as a positive as much as possible.

It’s clear that the push toward transparency surrounding the use of AI technology will not only continue in HR, but will be increasingly governed by new legislation. Companies that actively seek new ways to be transparent in their processes and keep the candidate experience in mind are far less likely to be caught off guard when new regulations come down, and much more likely to set themselves apart from competitors.

Authors

Nick Possley is the Head of Data Products and Engineering at AllyO, where he leads technical product innovation to increase profitability and market share. He works with customers and operations teams to understand the business challenges that stand in the way of those goals, then solves those challenges by bringing the best creative technical talent together to innovate.
